- Topic1/3

13k Popularity

32k Popularity

15k Popularity

6k Popularity

172k Popularity

- Pin

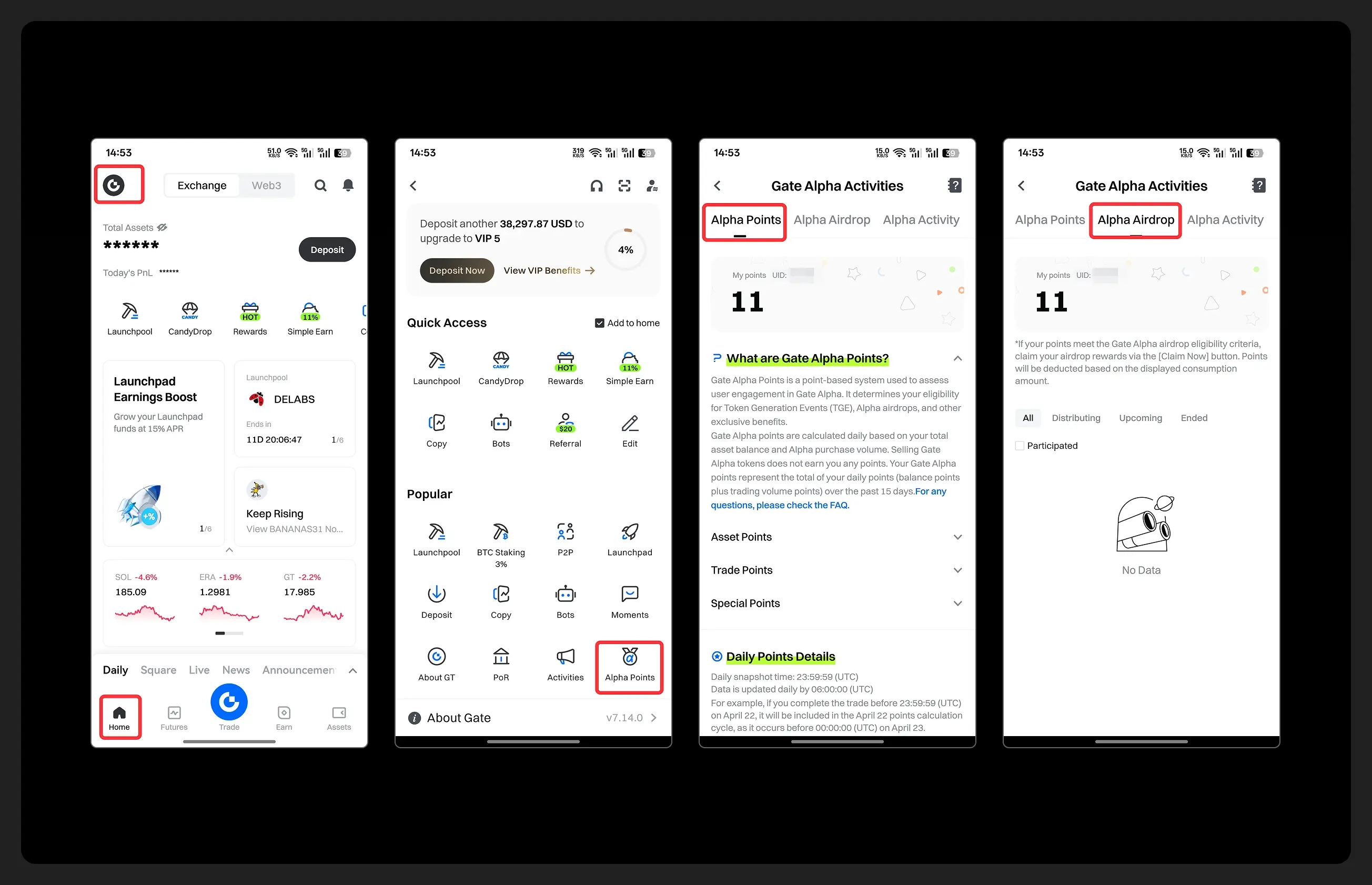

- Hey fam—did you join yesterday’s [Show Your Alpha Points] event? Still not sure how to post your screenshot? No worries, here’s a super easy guide to help you win your share of the $200 mystery box prize!

📸 posting guide:

1️⃣ Open app and tap your [Avatar] on the homepage

2️⃣ Go to [Alpha Points] in the sidebar

3️⃣ You’ll see your latest points and airdrop status on this page!

👇 Step-by-step images attached—save it for later so you can post anytime!

🎁 Post your screenshot now with #ShowMyAlphaPoints# for a chance to win a share of $200 in prizes!

⚡ Airdrop reminder: Gate Alpha ES airdrop is

- Gate Futures Trading Incentive Program is Live! Zero Barries to Share 50,000 ERA

Start trading and earn rewards — the more you trade, the more you earn!

New users enjoy a 20% bonus!

Join now:https://www.gate.com/campaigns/1692?pid=X&ch=NGhnNGTf

Event details: https://www.gate.com/announcements/article/46429

- Hey Square fam! How many Alpha points have you racked up lately?

Did you get your airdrop? We’ve also got extra perks for you on Gate Square!

🎁 Show off your Alpha points gains, and you’ll get a shot at a $200U Mystery Box reward!

🥇 1 user with the highest points screenshot → $100U Mystery Box

✨ Top 5 sharers with quality posts → $20U Mystery Box each

📍【How to Join】

1️⃣ Make a post with the hashtag #ShowMyAlphaPoints#

2️⃣ Share a screenshot of your Alpha points, plus a one-liner: “I earned ____ with Gate Alpha. So worth it!”

👉 Bonus: Share your tips for earning points, redemption experienc

- 🎉 The #CandyDrop Futures Challenge is live — join now to share a 6 BTC prize pool!

📢 Post your futures trading experience on Gate Square with the event hashtag — $25 × 20 rewards are waiting!

🎁 $500 in futures trial vouchers up for grabs — 20 standout posts will win!

📅 Event Period: August 1, 2025, 15:00 – August 15, 2025, 19:00 (UTC+8)

👉 Event Link: https://www.gate.com/candy-drop/detail/BTC-98

Dare to trade. Dare to win.

Kissinger on artificial intelligence: How should we view the international order in the age of AI?

Source: The Beijing News

From Google's AlphaGo defeating human Go players to ChatGPT sparking heated discussion in the technology community, every advance in artificial intelligence touches a nerve. There is no doubt that artificial intelligence is profoundly changing our society, economy, politics, and even foreign policy, and traditional theories often fail to explain all of these effects. In the book "The Era of Artificial Intelligence and the Future of Humanity", the renowned diplomat Henry Kissinger, former Google CEO Eric Schmidt, and Daniel Huttenlocher, dean of the MIT Schwarzman College of Computing, trace the history of artificial intelligence from different perspectives and discuss comprehensively the effects its development may have on individuals, enterprises, governments, societies, and countries. These leading thinkers believe that as artificial intelligence grows ever more capable, how to define the role of human beings will be a question we must keep grappling with for a long time to come. The following is excerpted from "The Era of Artificial Intelligence and the Future of Humanity" with the publisher's authorization, with cuts and revisions; subtitles were added by the editor.

Original authors | [US] Henry Kissinger / [US] Eric Schmidt / [US] Daniel Huttenlocher

**What will artificial general intelligence bring?**

Can humans and artificial intelligence approach the same reality from different perspectives and complement each other? Or do we perceive two different but partially overlapping realities: one that humans can explain through reason, and another that artificial intelligence can explain through algorithms? If the answer is the latter, then artificial intelligence can perceive things that we have not yet perceived and cannot perceive. This is not only because we lack the time to reason about them in our own way, but because they exist in a realm our minds cannot conceptualize. Humanity's pursuit of a "complete understanding of the world" will change, and people will realize that to obtain certain kinds of knowledge, we may need to entrust artificial intelligence to acquire it for us and report back. Whatever the answer, as artificial intelligence pursues more comprehensive and wide-ranging goals, it will increasingly appear to humans as a "creature" that experiences and understands the world: an entity that is part tool, part pet, and part mind.

As researchers approach or achieve artificial general intelligence, the puzzle will only deepen. As we discussed in Chapter 3, AGI will not be limited to learning and performing specific tasks. By definition, it will be able to learn and perform an extremely wide range of tasks, as humans do. Developing AGI will require enormous computing power, which may mean that only a few well-funded organizations are capable of creating it. Like current AI, AGI could readily be deployed in a decentralized way. Even so, given its capabilities, its applications will necessarily be restricted. Limits could be imposed on AGI by allowing only approved organizations to operate it. The question then becomes: Who will control AGI? Who will authorize its use? Is democracy still possible in a world where a few "genius" machines are run by a few organizations? And in that case, what will human-AI cooperation look like?

If artificial general intelligence does arrive, it will be a major intellectual, scientific, and strategic achievement. But even if it does not, artificial intelligence can still bring about a revolution in human affairs. AI's drive and ability to respond to unexpected events and to offer solutions set it apart from previous technologies. If left unregulated, AI could deviate from our expectations and, by extension, from our intent. The decision whether to limit it, cooperate with it, or defer to it will not be made by humans alone. In some cases, it will be determined by the AI itself; in others, it will depend on various enabling factors. Humans may be engaged in a "race to the bottom."

As AI automates processes, allows humans to explore vast amounts of data, and organizes and restructures the physical and social realms, those who move first may gain an advantage. Competitive pressure could push parties to race to deploy AGI without taking enough time to assess the risks, or to ignore the risks altogether. Ethics regarding artificial intelligence are essential. Each individual decision (to limit, to cooperate, or to comply) may or may not have dramatic consequences, but taken together, their impact is multiplied.

These decisions cannot be made in isolation. If humanity wants to shape the future, it needs to agree on common principles to guide every choice. Collective action is admittedly difficult, and sometimes impossible. Still, individual actions without the guidance of a common moral norm will only bring greater turmoil and chaos to humanity as a whole. Those who design, train, and work with AI will be able to achieve goals of a scale and complexity hitherto unattainable for humans. Examples include new scientific breakthroughs, new economic efficiencies, new forms of security, and new dimensions of social surveillance. Meanwhile, as AI and its uses expand, those who are not empowered may feel that they are being watched, studied, and acted upon by forces they do not understand and did not design or choose. This power operates in an opaque way that, in many societies, traditional human actors and institutions cannot tolerate. AI designers and deployers should be prepared to address these concerns. They can start by explaining to non-technical people what AI is doing, what it “knows,” and how it does it.

The dynamic and emergent nature of AI creates ambiguity in at least two ways. First, artificial intelligence may perform as we expect yet produce results that we cannot foresee. These outcomes may lead humanity to places its creators never anticipated. In the same way, politicians in 1914 failed to recognize that the old logic of military mobilization, coupled with new technology, would drag Europe into war. Artificial intelligence can also have serious consequences if it is deployed and used without careful consideration.

These consequences can be limited in scale, such as a self-driving car making a life-or-death decision, or enormous, such as a serious military conflict. Second, in some domains AI may be unpredictable, acting in completely unexpected ways. AlphaZero, for example, was given only the instruction to win at chess. From that alone, it developed a style of play that humans had never imagined in the game's long history. Humans may carefully specify an AI's goals, but as we give it greater latitude, the path it takes to those goals could surprise, and even alarm, us. Therefore, both the objectives and the mandate of AI need to be carefully designed, especially in areas where its decisions can be lethal. AI should not be regarded as autonomous or left unattended. Nor should it be allowed to take irrevocable actions without supervision, monitoring, or direct control. Artificial intelligence was created by humans, so it should also be supervised by humans. But one of the challenges of AI in our time is that the people with the skills and resources to create it do not necessarily have the philosophical perspective to understand its broader implications. Many creators of artificial intelligence focus primarily on the applications they are trying to build and the problems they want to solve. They may not stop to consider whether their solution will produce a historic revolution, or how their technology will affect different groups of people. The age of artificial intelligence needs its own Descartes and Kant to explain what we have created and what it means for humanity.

We need to organize rational discussions and negotiations involving governments, universities, and private-sector innovators. The goal should be to establish practical limits on AI's actions, similar to those that govern individual and organizational action today. AI shares some attributes with currently regulated products, services, technologies, and entities. However, it differs from them in important respects, and it lacks a fully defined conceptual and legal framework of its own. For example, AI's ever-evolving, emergent nature poses a regulatory challenge. What it is and how it operates in the world may vary across domains and evolve over time, and not always predictably. The governance of people is guided by codes of ethics. AI needs a moral compass of its own, one that reflects not only the nature of the technology but also the challenges it presents.

Often, established principles do not apply here. In the Age of Faith, defendants before the Inquisition could face trial by combat: the court could determine the crime, but God decided who prevailed. In the Age of Reason, human beings determined guilt according to the precepts of reason. They judged and punished crimes using concepts such as causality and criminal intent. But artificial intelligence does not operate by human reason, nor does it have human motivation, intention, or self-reflection. The introduction of AI will therefore complicate the existing principles of justice that apply to humans.

When an autonomous system acts on its own perceptions and decisions, do its creators bear responsibility? Or should the actions of an AI not be conflated with those of its creators, at least as far as culpability is concerned? Suppose AI is used to detect signs of criminal behavior, or to help determine whether someone is guilty. Must it then be able to "explain" how it reached its conclusions so that human officials can trust it? Furthermore, at what point in the technology's development, and in what context, should AI become the subject of international negotiation? This is another important topic of debate. If negotiations begin too early, the technology's development could be stifled, or parties could be tempted to conceal its capabilities. If they are delayed too long, the consequences could be devastating, especially in the military domain. This challenge is compounded by the difficulty of designing effective verification mechanisms for a technology that is intangible, opaque, and easily disseminated. The official negotiators will necessarily be governments. But technologists, ethicists, the companies that create and operate AI, and others outside the field will also need a voice.

The dilemmas posed by AI have far-reaching implications for different societies. Much of our social and political life now takes place on AI-enabled online platforms. Democracies in particular rely on these information spaces for debate and communication, for shaping public opinion, and for conferring legitimacy. Who, or what agency, should define the role of the technology? Who should regulate it? What role should individuals who use AI play? What about the companies that produce it? And the governments that deploy it? As part of the solution to these problems, we should try to make AI auditable, meaning that its processes and conclusions are both inspectable and correctable. In turn, whether corrections can be implemented will depend on our ability to refine principles for the forms that AI perception and decision-making take. Morality, will, and even causation do not fit well in a world of autonomous artificial intelligence. Similar problems arise at most levels of society, from transportation to finance to medicine.

Consider the impact of artificial intelligence on social media. Thanks to recent innovations, these platforms have quickly become an important part of our public life. As we discussed in Chapter 4, the features Twitter and Facebook use to highlight, limit, or outright ban content or individuals all rely on artificial intelligence, a testament to its power. The use of AI to promote or remove content and concepts, unilaterally and often opaquely, is a challenge for all nations, especially democracies. Our social and political life is increasingly shifting into domains governed by artificial intelligence, and we can navigate them only by relying on such curation. In that situation, can we still remain in control?

Using artificial intelligence to process large amounts of information presents another challenge: AI can increase the distortion of the world by catering to instinctive human preferences. This is an area where artificial intelligence can easily amplify our cognitive biases, and yet we still resonate with them. Surrounded by these voices, faced with a multitude of choices, and empowered to select and filter, people are exposed to a flood of misinformation. Social media companies do not run their news feeds in order to promote extreme and violent political polarization. But it is clear that these services do not maximize enlightened discourse either.

**Artificial intelligence, free information, and independent thinking**

So what should our relationship with AI look like? Should it be constrained, empowered, or treated as a partner in governing these domains? There is no doubt that the dissemination of certain information, especially deliberately fabricated falsehoods, can cause damage, division, and incitement, so some restrictions are needed. However, the condemnation, attack, and suppression of "harmful information" are now too loosely applied, and this too should prompt reflection.

In a free society, defining what counts as harmful information and disinformation should not fall solely within the purview of corporations. If, however, that responsibility is entrusted to a government body or agency, that body should operate according to established public standards and through verifiable procedures. This would prevent it from being exploited by those in power. If the responsibility is entrusted to an AI algorithm, the algorithm's objective function, learning, decisions, and actions must be transparent and subject to external scrutiny. There must also be at least some form of human appeal.

Of course, different societies will arrive at different answers. Some may emphasize freedom of expression, to varying degrees depending on how they understand individual expression, and may therefore limit AI's role in moderating content. Each society chooses which ideas it values, which can lead to complex relationships with multinational platform operators. AI absorbs like a sponge, learning from humans even as we design and shape it.

Therefore, the choices of each society differ, and so do other things. Each society's relationship with AI, its perception of AI, and the ways AI imitates humans and learns from its human teachers all differ too. One thing is certain, however: the human search for facts and truth should not lead a society to experience life through an ill-defined and untestable filter. The spontaneous experience of reality, for all its contradictions and complexities, is an important aspect of the human condition, even when it leads to inefficiencies or errors.

**Artificial intelligence and the international order**

Across the globe, countless questions await answers. How can AI-enabled online platforms be regulated without sparking tensions among countries concerned about their security? Will these platforms erode traditional notions of national sovereignty? Will the resulting changes polarize the world to a degree not seen since the collapse of the Soviet Union? Will smaller nations object? Will attempts to mediate these consequences succeed? Or is there any hope of success?

As the capabilities of artificial intelligence continue to grow, defining the role of humans in cooperation with it will become increasingly important and complex. We can imagine a world in which humans increasingly defer to the judgment of artificial intelligence on ever more important issues. Suppose an offensive adversary is successfully deploying AI. Can leaders on the defensive side decide not to deploy their own AI and take responsibility for that choice, even though they cannot be sure how such a deployment would evolve? And if AI is better able to recommend a course of action, is there reason for decision-makers to accept it, even if that course requires some degree of sacrifice? How can a person know whether such a sacrifice is essential to victory? And if it is essential, are policymakers really willing to veto it? In other words, we may have no choice but to foster artificial intelligence. But we also have a responsibility to shape it in a way that is compatible with the future of humanity. Imperfection is one of the norms of human experience, especially when it comes to leadership.

Policymakers are often weighed down by intolerable concerns. Sometimes their actions rest on false assumptions; sometimes they act out of pure emotion; at still other times, ideology distorts their vision. Whatever strategies are used to structure human-AI partnerships, they must be adapted to humans. If artificial intelligence exhibits superhuman abilities in certain domains, its use must be compatible with the imperfect human environment.

In the security arena, AI-enabled systems respond so quickly that adversaries may attempt to strike before those systems become operational. The result could be an inherently unstable situation comparable to the one created by nuclear weapons. Yet nuclear weapons are framed within concepts of international security and arms control. Over the past several decades, governments, scientists, strategists, and ethicists have developed those concepts through continuous refinement, debate, and negotiation. Artificial intelligence and cyber weapons have no comparable framework.

In fact, governments may be reluctant even to acknowledge that such weapons exist. Countries, and possibly tech companies, need to agree on how to coexist with weaponized AI. The spread of AI through governments' defense functions will alter the international balance and the calculations on which it rests in our time. Nuclear weapons are expensive and, because of their size and structure, difficult to conceal. Artificial intelligence, by contrast, can run on computers found everywhere. Training a machine learning model requires expertise and computing resources, so creating an AI requires resources on the scale of a large company or a nation. But AI applications run on relatively small computers, so they are bound to be widely used, including in ways we did not expect. Will anyone with a laptop, an internet connection, and a determination to probe the dark side of AI eventually gain access to AI-powered weapons? Will governments allow actors with close ties to them, or no ties at all, to use AI to harass their adversaries? Will terrorists plan AI attacks? Will they be able to attribute these activities to states or other actors?

In the past, diplomacy took place in an organized and predictable arena; today, the information it can draw on and its scope of action will be greatly expanded. The clear boundaries once formed by geographical and linguistic differences will gradually disappear. AI translation will facilitate dialogue without requiring the linguistic and cultural fluency that human translators once needed. AI-powered online platforms will facilitate cross-border communication, while hacking and disinformation will continue to distort perceptions and assessments. As the situation grows more complex, it will become harder to reach enforceable agreements with predictable outcomes.

The possibility of combining AI capabilities with cyberweapons deepens this dilemma. Humanity sidestepped the nuclear paradox by drawing a clear distinction between conventional weapons (considered consistent with conventional strategy) and nuclear weapons (considered the exception). Once unleashed, the destructive power of nuclear weapons is indiscriminate, whatever the target; conventional weapons, by contrast, can discriminate between targets. But cyberweapons that can both identify targets and wreak havoc on a massive scale erase that distinction.

Fueled by artificial intelligence, these weapons will become even more unpredictable and potentially more destructive. At the same time, as they move through networks, it is impossible to determine who is behind them. They are hard to detect because, unlike nuclear weapons, they are not bulky. They can even be carried on USB sticks, which facilitates proliferation. In some forms, these weapons are difficult to control once deployed, all the more so given the dynamic and emergent nature of artificial intelligence.

This situation challenges the premises of the rules-based world order. It also makes it imperative to develop concepts for AI arms control. In the age of artificial intelligence, deterrence will no longer follow historical norms, nor can it. At the dawn of the nuclear age, leading professors and scholars (with government experience) at Harvard, MIT, and Caltech held discussions on the subject. A conceptual framework for nuclear arms control was developed from the insights of those discussions. This framework in turn led to the creation of a system (and of the institutions to implement it in the United States and other countries).

As important as that academic thinking was, it was implemented separately from the Pentagon's thinking on conventional warfare: it was a new addition, not a modification. But the potential military uses of artificial intelligence are broader than those of nuclear weapons, and the distinction between offense and defense is unclear, at least for now. In a world this complex and inherently unpredictable, artificial intelligence becomes yet another possible source of misunderstanding and error. Sooner or later, the great powers with high-tech capabilities will have to engage in an ongoing dialogue.

Such a dialogue should focus on one fundamental issue: avoiding catastrophe and surviving. Artificial intelligence and other emerging technologies, such as quantum computing, appear to be making realities beyond human perception more accessible. Ultimately, however, we may find that even these technologies have their limitations. Our problem is that we have not grasped their philosophical implications. We are being propelled forward by them involuntarily rather than consciously.

The last major shift in human consciousness came with the Enlightenment. It happened because new technologies produced new philosophical insights, which were in turn disseminated through technology (in the form of the printing press). In our time, new technologies have been developed without corresponding guiding philosophies. Artificial intelligence is a vast undertaking with far-reaching potential benefits. Humans are working hard to develop it, but will we use it to make our lives better or worse? It promises more powerful medicines, more efficient and equitable health care, more sustainable environmental practices, and other visions of progress. At the same time, however, it can also distort information, or at least complicate the process of consuming information and discerning truth. In doing so, it can weaken some people's capacity for independent reasoning and judgment.

In the end, a "meta" question emerges: Can humans satisfy their need for philosophy with the "assistance" of an artificial intelligence that interprets and understands the world differently? Humans do not fully understand machines, but will we eventually make peace with them and change the world? Immanuel Kant opens the preface to his "Critique of Pure Reason" with this point:

Human reason has this peculiar fate: in one branch of its knowledge, it is burdened by questions that it cannot dismiss, because they are imposed on it by the very nature of reason itself. Yet it also cannot answer them, because they surpass all the powers of reason.

In the centuries since, humans have explored these questions in depth, and some of them touch on the nature of the mind, of reason, and even of reality. Humanity has made great breakthroughs, but it has also run into many of the limits Kant posited: a realm of questions it cannot answer, a realm of facts it cannot fully understand. Artificial intelligence can learn and process information in ways that human reason alone cannot. Its emergence may therefore allow us to make progress on questions that have so far proven beyond our ability to answer. But success will raise new questions, some of which we have tried to illuminate in this book. Human intelligence and artificial intelligence are at a juncture where the two will converge in pursuits on a national, continental, or even global scale. Understanding this shift, and developing a guiding ethical code around it, will require the collective wisdom, voice, and shared commitment of all segments of society. That includes scientists and strategists, politicians and philosophers, clergy and CEOs. This commitment must be made not only within countries but also between them. What kind of partnership can we have with artificial intelligence, and what kind of reality will emerge from it? Now is the time to define it.


Excerpted by / Liu Yaguang

Edited by / Liu Yaguang

Proofread by / Zhao Lin